By Joscha Wasser 1, Timo Haavisto 2, Victor Blanco Bataller 2, Konrad Bielecki 1, Daria Vorst 1, Mika Luimula 2, Marcel Baltzer 1

1 Fraunhofer Institute for Communication, Information Processing and Ergonomics FKIE

2 Turku University of Applied Sciences, School of ICT, Futuristic Interactive Technologies

The current geopolitical and epidemiological situation has reinforced the need for transnational cooperation and for the ability to conduct conferences, training, design, or operations in a digital space. The metaverse can provide a virtual space where users can meet independently of their physical location to design, evaluate, and train together, and it has consequently received a lot of visibility. In fact, the metaverse was first listed on the Gartner hype cycle for emerging technologies in 2022 (Gartner 2022), and according to Citibank, the global metaverse economy could be worth $13 trillion by 2030 (Fortune 2022). In June 2023, Apple announced its new Vision Pro AR headset at a price of $3,499, which is expensive for most people (CNET 2023). The industrial metaverse is expected to become popular as the first application area. For example, Nokia expects the industrial metaverse to lead the commercialization of the metaverse (Nokia, 2023). According to Kang et al. (2022), although the potential of the industrial metaverse to bring changes to industrial areas has been recognized, it is still in its infancy. The industrial metaverse as a digital twin of the workplace enables interaction with physical objects in real time and improves the visualization of cyber-physical systems (Lee & Kundu, 2022). Emerging Industry 4.0 technologies such as AI, digital twins, and XR will be the basis for realizing the industrial metaverse (Mourtzis et al., 2022). The metaverse, as a part of Industry 5.0, is thus expected to foster innovation and improve productivity (Mourtzis, 2023). As an example of the potential of the industrial metaverse, NVIDIA has applied its Omniverse platform with BMW to build industrial metaverse applications that go digital-first to optimize layouts, robotics, and logistics systems (NVIDIA, 2023).
Specific industrial applications, however, can already be created with significant benefits, as the military training method introduced in this paper will demonstrate.

Military forces typically train using three different methods: desktop-based simulations for tactical and communication training, high-fidelity simulator setups based on real interiors, and real equipment, which at times is modified to accommodate a trainer. These methods, however, are either extremely expensive to operate, maintain, and upgrade or too different from the real experience to provide authentic and effective training. Off-the-shelf simulation software such as Virtual Battlespace by Bohemia Interactive is used by almost all NATO members, for example, to train their soldiers in tactical behaviors, but the interaction is normally limited to a keyboard, a mouse, and an audio connection (Bohemia Interactive Simulations 2023). The software can connect large numbers of users in a single virtual environment and simulate opposing forces, but the immersion of the individual user remains low. At the other end of the spectrum are real vehicle interiors adapted as simulators, which offer an authentic experience with the external views fed as a digital stream into the real vision systems, similar to the flight simulators used in civil aviation for pilot training. For ground forces, the German Army is currently constructing a “virtual action trainer” (Fig. 1), a VR-based simulator that allows up to four participants to physically move within a 200 m² space while resolving, for example, a hostage situation (Bundeswehr 2023).

The aim is to combine the benefits of a mostly virtual simulator, namely flexibility and cost-effectiveness, with the immersion of a real vehicle interior, providing the fidelity required for an authentic training and development platform. To improve the effectiveness and to train tactical behavior, a single setup needs to be networked so that a large number of users can access it from various locations, using different means to interact with the simulator.

1. Tangible XR

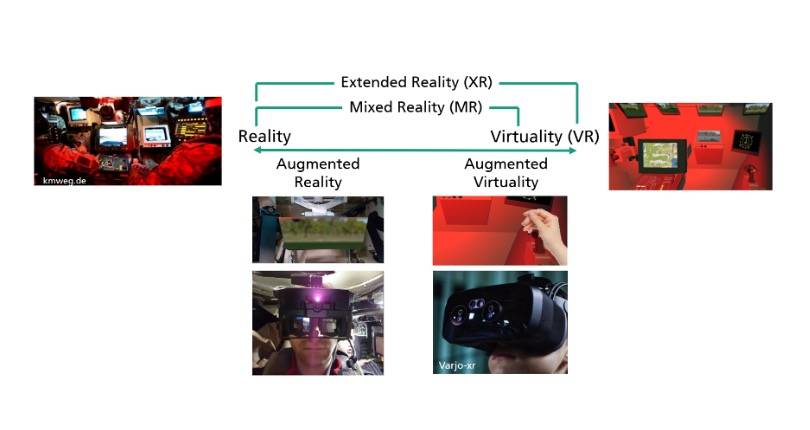

With advances in computing power and the increasing quality of head-mounted displays, virtual reality applications are becoming powerful tools for gaming and training. The spectrum between virtuality and reality covers several definitions, such as augmented reality (AR), which enhances a predominantly real view through virtual icons or highlights, and mixed reality (MR), which combines real features with a digital environment or model (Fig. 2). According to XR4All (2023), eXtended Reality (XR) can be seen as an umbrella term for Virtual Reality (VR) and Mixed Reality (MR), including Augmented Reality and Augmented Virtuality (see Fig. 2). Virtual reality is defined as a method of interacting with a computer-simulated environment (Das et al. 1994). Augmented reality, in turn, is a technology that allows computer-generated virtual imagery to exactly overlay physical objects in real time (Zhou 2008). Augmented Virtuality, on the other hand, is a method that allows real-world objects, such as the user’s hands, to be displayed inside the virtual environment, requiring VR glasses combined with a front camera. The metaverse describes a virtual universe shared amongst its users, allowing them to interact with each other within the boundaries of the platform (Nevelsteen 2017).

Those types of virtual environments are typically accessed using VR headsets and handheld controllers and aim to create an immersive experience by giving the user a sense of presence within the context of a virtual world. The illusion is perceptual but not cognitive, as the perceptual system identifies the events and objects and the brain-body system automatically reacts to the changes in the environment, while the cognitive system slowly responds with a conclusion that what the person experiences is an illusion (Slater 2018). This can be improved by using more immersive systems: “Immersion is user’s engagement with a VR system that results with being in a flow state.” Immersion in VR systems mainly depends on sensory immersion, which is defined as “the degree to which the range of sensory channel is engaged by the virtual simulation” (Kim et al. 2018, Berkman et al. 2019).

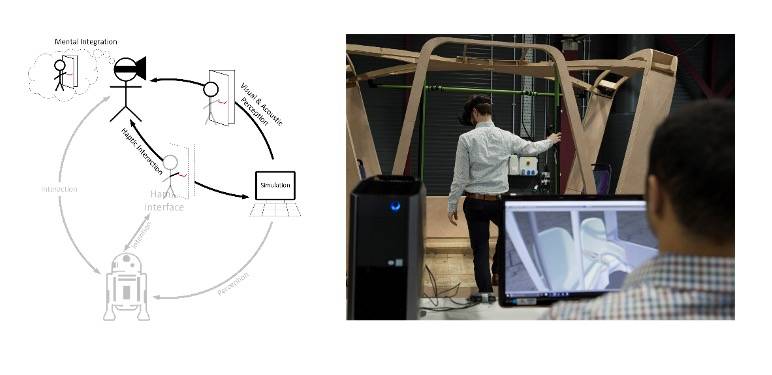

The Tangible XR method, an extension of Mixed Reality, aims to address the limited immersion caused by the lack of haptic feedback by combining virtual imagery with real-world objects, which adds a haptic feedback loop and creates a stronger immersion for the user. It enables the user to interact with real-world objects that are part of the virtual model or environment but are also present in reality (Fig. 3). That way, a user can, for example, grab and hold onto a handrail in a mockup of a driverless shuttle whilst moving through the vehicle, which provides real touchpoints in a virtual environment. With this method, only the components with which the user comes into direct contact need to be mocked up, whilst other features are purely digital. This allows for fast and cost-effective design changes during a user trial; in the case of the driverless shuttle, for example, different window configurations could be evaluated in a single setup.

In the military context, Tangible XR can be used as a development and evaluation tool for new battle tank interiors and the systems within them (Fig. 4). Using this approach, different versions of a component, such as a monitor for a vision system, can be swapped with the click of a button and evaluated in the same context without leaving the immersive environment. By providing haptic interfaces the users are familiar with, such as the two-hand controller for the gunner in an IFV Puma, the degree of immersion is greatly increased.

The Tangible XR simulator developed at the Fraunhofer Institute for Communication, Information Processing and Ergonomics, as part of a project funded by the German armed forces (BAAINBw K1.2), is based on an IFV Puma interior and is mainly constructed from aluminum profiles. Just like in the real vehicle, an AUTOFLUG Safety Seat SDS was integrated at each of the three positions, which can be manually adjusted to the required seating position. The driver space also features a set of pedals and a replica of the steering wheel, which was manufactured using rapid prototyping. The other positions, for the commander and the gunner, feature hand controllers which are also reproduced from the originals, including the main functions, for example the zoom switch and the control of the turret. A further feature is the flap mirrors, which are located at head height at all positions and allow the crew to view the exterior whilst working below the hatch. Those can be manually adjusted to provide the correct viewing angle. All these features generate sensor data, recorded using potentiometers and switches, in order to translate the functionality into the simulation.
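As a minimal sketch of how such a potentiometer reading could be translated into a simulation input, the Python snippet below maps a raw analog value to a normalized steering value. The 10-bit value range, the calibration defaults, and the function name `normalize_reading` are assumptions made for illustration and are not taken from the simulator described here.

```python
def normalize_reading(raw: int, raw_min: int = 0, raw_max: int = 1023) -> float:
    """Map a raw potentiometer reading to the range [-1.0, 1.0].

    raw_min and raw_max are hypothetical calibration values for the
    mechanical end stops (here: a 10-bit analog-to-digital converter).
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    # Clamp first so that noise beyond the calibrated end stops
    # cannot produce values outside the expected range.
    clamped = max(raw_min, min(raw, raw_max))
    fraction = (clamped - raw_min) / (raw_max - raw_min)
    return 2.0 * fraction - 1.0
```

A value near 0.0 would then correspond to a centered steering wheel, while -1.0 and 1.0 would correspond to the two end stops.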

2. Metaverse

Currently, none of the leading VRSP (Virtual Reality Social Platform) technologies combine the means for social communication, hands-on experiences, and the integration of digital twins, and they have unresolved challenges such as update management, number of simultaneous users, usability, user experience, license policies, customization, limited user interaction, and user data gathering. In spring 2021, Futuristic Interactive Technologies (FIT), a research group at Turku University of Applied Sciences, started developing its own metaverse technology, nowadays licensed by ProVerse Interactive Ltd, with the vision to create a technology that overcomes these challenges and allows users to have hands-on experiences in digital twins (Luimula 2022).

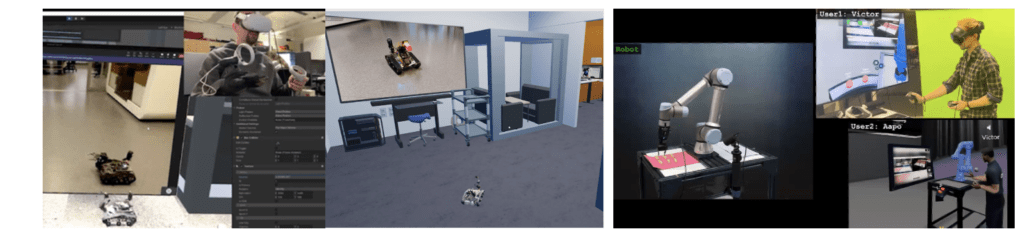

In the first experiments, Unreal and Unity game engines were tested and compared (Österman 2021). The Unity game engine was ultimately chosen as the base as it is widely used among industrial partner businesses and because it can be scaled and used with available resources, both on the client side and hardware side. Several metaverse environments for vocational training and university students were later developed. One of the first environments was developed for collaborative training in harbor operations in ship turnaround and remote-controlled systems (e.g. forklifts, cranes, and trucks). It enables professionals to efficiently train in different scenarios, such as using a gantry crane or a forklift, and to communicate with truck drivers and seafarers. Single-user scenarios provided by third parties, such as lorry reversal exercises and a forklift obstacle course, were brought into the common training environment with basic multi-user elements, where the trainee could be observed (Fig. 5).

Subsequently, this environment was extended to support collaboration between different single-user scenarios and digital twin integration, focusing on the remote control of a tracked robot using VR headsets and smart gloves. The use of metaverse technology enabled advanced user interface development, leveraging the cross-platform functionality and application programming interface support. First, an accurately modeled 3D environment matching the physical premises and devices used in the remote operation was created. Then, a video stream from a camera in the real-world environment was injected into a screen in the digital environment. When in operation, this allowed the robot user to observe both the real-world video feed and the digital twin representation of the environment in the same VR environment. Prototyping and testing of the real robot behavior in comparison to the digital twin behavior could be executed efficiently using this method, as any discrepancies between the digital twin representation and the real-world video would be revealed immediately (Kaarlela et al. 2023). The digital model could then be adjusted until the behavior of both models matched exactly. VR headsets and smart gloves were needed for efficient operations such as rotations and accurate movements of the robot arm in the 3D environment with digital twin visualization and video information (Fig. 6, left). In the latest robot setup, FIT has focused on a Universal Robots UR5 cobot with more realistic avatars. The cobot is presented to the users as a digital twin together with real-time video information. This setup showcases collaborative elements of the industrial metaverse where digital and real worlds are seamlessly combined (Fig. 6, right).

FIT has also developed a ship command bridge simulation with a focus on photorealistic and immersive simulation, using realistic ocean physics and fluid dynamics (Tarkkanen et al. 2023). In addition, vessels affect each other through the water dynamics, and all ship movements are calculated through forces; consequently, the simulation is non-deterministic by nature, i.e., the environment and graphics are never the same. One of the system architecture focus areas was modularity, allowing new scenarios to be developed efficiently based on any given specifications. Compared to the harbor environment, one additional objective was to focus on learning analytics, where data was collected from eye tracking (e.g. blink rate, gaze direction including observed objects of the environment, and pupil size), ship movements (e.g. locations, speed, directions), interactable objects (behavioral data from each interactable once operated), and user movements (teleportations inside the environment).
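The data categories listed above could, for instance, be grouped into one record per sampling instant. The following Python sketch illustrates this idea only; all class names, field names, and units are hypothetical and do not describe the actual data format of the simulator.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple


@dataclass
class EyeSample:
    # Hypothetical eye-tracking fields and units.
    blink_rate_hz: float
    gaze_direction: Tuple[float, float, float]
    observed_object: Optional[str]
    pupil_size_mm: float


@dataclass
class AnalyticsRecord:
    # One sample of the learning analytics stream (illustrative layout).
    timestamp_s: float
    user_id: str
    eye: EyeSample
    ship_position: Tuple[float, float]
    ship_speed_kn: float
    ship_heading_deg: float
    interactions: List[str] = field(default_factory=list)
    teleported: bool = False

    def to_json(self) -> str:
        """Serialize the record, including the nested eye sample, to JSON."""
        return json.dumps(asdict(self))


record = AnalyticsRecord(
    timestamp_s=12.5,
    user_id="student-1",
    eye=EyeSample(0.3, (0.0, 0.0, 1.0), "radar_display", 3.5),
    ship_position=(1.0, 2.0),
    ship_speed_kn=8.0,
    ship_heading_deg=90.0,
    interactions=["throttle_lever"],
)
```

Storing each sample as a flat, timestamped record of this kind would keep the eye-tracking, ship, and interaction data aligned on a common time axis for later analysis.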

This environment has so far been the most challenging one for the metaverse technology, with realistic synchronized ocean physics, fluid dynamics, and interactable objects. As a result, several users can now collaborate in the same virtual environment regardless of their physical location. Seafarer students can train both collaboration and their individual duties. The same objects in the environment can be interacted with by several users at the same time. The use of metaverse technology also enables voice communication and training scenarios with chains of command. In addition, users and their actions are visible to all other users via avatar representation, as illustrated in Figure 7.

To detect and predict seafarer students’ training success, a neural network can be trained. This requires the collection and annotation of a considerable amount of training data, gathered from both successful and failed attempts at the scenario. The training data would consist of simulation data as well as the user’s behavioral data as a time series spanning the duration of the scenario. Once enough training data has been collected and annotated, the neural network could identify the patterns leading to failure or success and eventually generate synthetic training attempts, which would lead to a generalized automatic evaluation: a neural network specializing in seafarer training evaluations.

3. Architecture – system integration

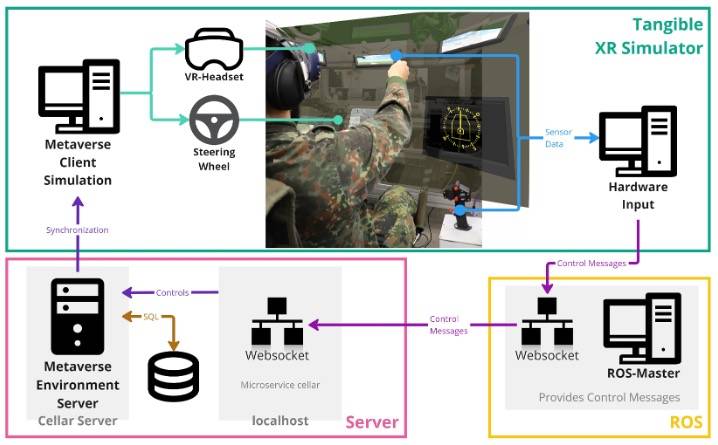

The following section introduces the system architecture (Fig. 8) that enables the Tangible XR simulator at the Fraunhofer FKIE to connect and interact with the Metaverse environment hosted at Turku UAS.

When a user (a member of the tank crew) in the Tangible XR simulator interacts with the system, for example when the driver moves the steering wheel, a steering command is generated. The data is then converted to a ROS (Robot Operating System) message, which is published by the player simulation at FKIE Wachtberg. The message is then received via the ROS Master and communicated to the main server, both of which are located at the Turku University of Applied Sciences. The movement information is subsequently sent as server-authoritative environment object synchronization messages to the client user simulation PC in Wachtberg via the KCP-ARQ protocol. There, the user experiences the effect of their input in the environment and can conduct their mission or evaluation. The user views the digital interior and environment through a virtual reality headset, which makes matching the virtual and physical locations of the interfaces crucial. Hence, the recorded data is used not only to affect the model’s movement but also to change the positioning of the interior components: if the user adjusts one of the mirrors by manually pushing it back, the digital twin will also appear further back and the view of the exterior changes accordingly. All inputs are recorded and can be combined with analytical tools to support the evaluation of a concept or of the training success.
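The server-authoritative principle described above can be illustrated with a small Python sketch: the client only sends inputs, the server alone updates the vehicle state, and the client renders whatever snapshot it receives. The simple kinematic update, the class names, and the `max_turn_rate` parameter are assumptions chosen for illustration; they do not reproduce the actual metaverse server logic or the KCP transport.

```python
import math
from dataclasses import asdict, dataclass


@dataclass
class VehicleState:
    x: float = 0.0
    y: float = 0.0
    heading_rad: float = 0.0


class AuthoritativeServer:
    """Owns the vehicle state; clients never modify it directly."""

    def __init__(self, max_turn_rate: float = 0.5) -> None:
        self.state = VehicleState()
        self.max_turn_rate = max_turn_rate  # rad/s at full steering (assumed)

    def apply_input(self, steering: float, speed: float, dt: float) -> dict:
        """Advance the state by one time step and return a snapshot."""
        steering = max(-1.0, min(1.0, steering))  # reject out-of-range input
        self.state.heading_rad += steering * self.max_turn_rate * dt
        self.state.x += speed * math.cos(self.state.heading_rad) * dt
        self.state.y += speed * math.sin(self.state.heading_rad) * dt
        return asdict(self.state)


class Client:
    """Renders the last snapshot received from the server."""

    def __init__(self) -> None:
        self.rendered = VehicleState()

    def on_snapshot(self, snapshot: dict) -> None:
        self.rendered = VehicleState(**snapshot)


server = AuthoritativeServer()
client = Client()
client.on_snapshot(server.apply_input(steering=0.0, speed=2.0, dt=0.5))
```

Because the client state is always overwritten by the server snapshot, all connected users would see the same vehicle position regardless of which crew member produced the input.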

Developing the first research prototype

Initially, a simplified digital model of an IFV Puma interior, excluding detailed interfaces such as the battle management system, was shared by the FKIE team to evaluate the ability to integrate the model into the existing metaverse application. The following step was the creation of an exterior hull for the vehicle by the team in Turku. Once the two models were combined, they were integrated into an existing environment, which already contained the architecture to control multiple vehicles. A necessary adaptation to the existing architecture was a gateway that enables external access to the metaverse servers by allowing connected users to subscribe to certain topics, on which they can publish messages. The initial concept was to host the ROS Master locally at the simulator; however, this would have required an open network configuration permitting access to the internal network at the FKIE. Due to the military context of the application, it is likely that future applications will also be used within a restricted network, which is why, in the second concept, the ROS Master is hosted on the servers of the Turku University of Applied Sciences.

The connection was therefore achieved using a ROSbridge WebSocket and a roscore server on the servers of the Turku University of Applied Sciences. These can be accessed from outside the local network and allow connected users to subscribe to certain topics, on which they can publish messages. In this case, every tank has its own topic, and the output from the joystick was published on the topic of the tank that was to be controlled. In the Turku UAS industrial metaverse environment, a ROS Connector was implemented. It subscribes to the topics of the tanks and listens for incoming commands, which it forwards to the vehicles to initiate the desired movements. The system gives priority to users of the platform, meaning that the tanks can only be remotely operated when no one is in the driver’s seat controlling them in the virtual environment. However, if someone is in a different seat (for example, that of the weapons systems operator), the tanks can still be controlled remotely. This research prototype was first tested locally using the arrow keys of the computer keyboard and a custom client application in Unity that sent commands similar to those of the real joystick.
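To make the topic scheme and the priority rule more concrete, the following Python sketch builds a publish request in the JSON format of the rosbridge protocol (`op`, `topic`, `msg`) and checks whether a remote command would be accepted. The topic naming scheme, the use of a `geometry_msgs/Twist`-shaped payload, the seat labels, and all function names are assumptions for illustration; the text above does not specify them.

```python
import json
from typing import Set


def tank_topic(tank_id: int) -> str:
    # Hypothetical naming scheme: one command topic per tank.
    return f"/tank_{tank_id}/cmd_vel"


def build_publish(tank_id: int, linear_x: float, angular_z: float) -> str:
    """Return a rosbridge 'publish' operation as a JSON string.

    The payload follows the field layout of geometry_msgs/Twist, which is
    an assumed message type for the joystick output.
    """
    message = {
        "op": "publish",
        "topic": tank_topic(tank_id),
        "msg": {
            "linear": {"x": linear_x, "y": 0.0, "z": 0.0},
            "angular": {"x": 0.0, "y": 0.0, "z": angular_z},
        },
    }
    return json.dumps(message)


def accept_remote_command(occupied_seats: Set[str]) -> bool:
    """Platform users have priority: remote control is only allowed
    while nobody occupies the driver's seat in the virtual environment."""
    return "driver" not in occupied_seats
```

In such a setup, the JSON string would be sent as a text frame over the WebSocket connection, and the connector on the receiving side would apply the priority check before forwarding the command to the vehicle.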

To test this setup, the data recorded from a controller was sent as a ROS message directly to the Turku UAS servers via WebSocket tunneling, no longer requiring the internal network to be accessed. Using this method, the team in Wachtberg was able to use the controller to remotely control the movement of the model in the virtual environment hosted in Turku. This proved that, using the jointly developed system, remote inputs and interactions in the Turku UAS metaverse environment can be achieved, replicated, and scaled. As the ROS messages are identical to those used in the simulator setup, the next step was linking the steering wheel and pedal inputs with the virtual model. Subsequently, the driver position of the FKIE Tangible XR Simulator was fully integrated into the metaverse application. This setup was then demonstrated as part of the annual AR, VR, and MR specialist meeting of the German armed forces, with the driver located in Germany and the commander in Finland. The system architecture was adapted to allow the users to connect to specific positions in the simulator. Typically, the metaverse permits users to change between different roles whilst in the virtual environment; this initial setup, however, limits the user to a single role. The demonstration proved that a decentralized crew can use the setup to control a single vehicle via the metaverse as a common interface.

4. Results

The first trials demonstrated that the current metaverse platform supports the integration of remote controls and that a ROS connection can be implemented using a WebSocket in addition to the already existing MQTT-based remote communication. With a production SSL certificate, the WebSocket endpoint can be configured to support native encryption, which is essential for transmitting classified data streams.

The trials also indicate that expanding the remote control operations to several input devices would be possible with two architecture design choices: parallel WebSocket tunneling (using several individual WebSocket endpoints) and serial WebSocket tunneling (using one WebSocket tunnel in which the remote control data of all input devices is transmitted serially as a binary array). WebSocket tunneling supports full-duplex data streaming: one future advancement would be sending information from the environment back toward the devices that are connected via the tunnel, independent of the data streams traveling toward the environment.
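The serial option can be sketched as follows: the readings of all input devices are packed into a single binary frame that could be sent through one WebSocket tunnel and unpacked on the receiving side. The frame layout (a device count followed by pairs of device identifier and 32-bit float value) and the function names are hypothetical and serve only to illustrate the idea.

```python
import struct
from typing import Dict

# Hypothetical frame layout in network byte order:
#   1 byte   number of entries
#   per entry: 1 byte device id + 4 byte float value
ENTRY_FORMAT = "!Bf"
ENTRY_SIZE = struct.calcsize(ENTRY_FORMAT)


def pack_frame(readings: Dict[int, float]) -> bytes:
    """Pack the readings of all input devices into one binary frame."""
    frame = struct.pack("!B", len(readings))
    for device_id, value in sorted(readings.items()):
        frame += struct.pack(ENTRY_FORMAT, device_id, value)
    return frame


def unpack_frame(frame: bytes) -> Dict[int, float]:
    """Recover the device readings from a frame built by pack_frame."""
    (count,) = struct.unpack_from("!B", frame, 0)
    readings = {}
    offset = 1
    for _ in range(count):
        device_id, value = struct.unpack_from(ENTRY_FORMAT, frame, offset)
        readings[device_id] = value
        offset += ENTRY_SIZE
    return readings
```

Compared with the parallel option, a single frame keeps the readings of all devices synchronized in time, at the cost of requiring both ends to agree on the frame layout.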

The latency observed in round-trip operation from FKIE to Turku UAS was in the range of 60–70 milliseconds. However, the measurements covered only the complete round trip of the environment server-client synchronization and did not include the ROS message and tunneling delays. Further research and metrics collection are required in this field. Nevertheless, the delays observed during the experiment are encouraging for further research and suggest that multi-user training operations in metaverse environments are possible and scalable.
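For the further metrics collection mentioned above, round-trip times could be gathered with a small helper such as the Python sketch below. The callable `send_and_wait` is a hypothetical stand-in for whatever operation sends a message and blocks until the corresponding response arrives; the sketch does not reproduce the measurement procedure used in the trials.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_round_trips(send_and_wait: Callable[[], None],
                        samples: int = 20) -> List[float]:
    """Time repeated round trips and return the durations in milliseconds."""
    durations_ms = []
    for _ in range(samples):
        start = time.perf_counter()
        send_and_wait()  # hypothetical: send a message, wait for the reply
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return durations_ms


def summarize(durations_ms: List[float]) -> Dict[str, float]:
    """Reduce a list of round-trip times to a few robust statistics."""
    return {
        "min_ms": min(durations_ms),
        "median_ms": statistics.median(durations_ms),
        "max_ms": max(durations_ms),
    }
```

Wrapping the ROS publishing and the tunneling step in `send_and_wait` would allow the delays that were not covered by the measurements above to be included in the same statistics.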

5. Conclusions

The initial demonstrations proved the ability to connect a simulator based on the tangible XR method with the metaverse environment to control a vehicle or other integrated systems.

The system can now be extended to further functions, roles, and vehicles to be used for training purposes, for example enabling NATO members to practice joint operations at a fraction of the cost and effort that traditional training requires. Using this method, novel doctrines or scenarios can be developed and taught to a large number of military personnel simultaneously in separate locations and through different means of accessing the virtual environment, depending on the aim of the training. Beyond the development of new tactics and behavior, the presented method could support the design and testing of new interfaces and entire vehicle interiors. Because technology and vehicles are shared amongst partners, within either NATO or the EDA, users from different backgrounds must be able to use the same interfaces. The concept is not limited to a tangible XR simulator: a wide range of access methods could be integrated and combined with the metaverse, enabling researchers and engineers to rapidly adapt concepts and test iterations with larger numbers of participants. To facilitate the integration, the different training methods and simulation capabilities will be considered as part of the following steps.

For a training and development tool, assessing the results within the system, for example using the aforementioned neural networks, could enhance the quality significantly. Further studies could investigate the soldiers’ situation awareness and cognitive load during collaborative scenarios when using the proposed system and use the results not only to improve it but potentially to develop a self-training system that challenges or supports the user based on their specific needs.

In the future, an application in the collaborative control of remote-controlled units from independent locations could be possible. This would allow users from different geolocations to connect as a single crew inside a command station and to operate in their known environment but at a safe distance from the operational area.

A further aim is to expand the research project to further nations within the NATO network to investigate the potential of joint military training and the development of transnational doctrines. The varying security and safety measures adopted by different nations are also relevant to the proposed system and should be considered in future developments.

Funding

This research was funded by the German Ministry of Defense and the German Army (BAAINBw) through the project MESiKa – Methodenentwicklung für die Evaluation von Sichtunterstützungssystemen in Kampfräumen (E/K2AA/MA071/HF029) and the Finnish Ministry of Education and Culture under the research profile for funding for the project “Applied Research Platform for Autonomous Systems” (diary number OKM/8/524/2020).

References

- 1. Gartner. What’s New in the 2022 Gartner Hype Cycle for Emerging Technologies, August 10, 2022. Available online: https://www.gartner.com/en/articles/what-s-new-in-the-2022-gartner-hype-cycle-for-emerging-technologies

- 2. Fortune. Citi says metaverse economy could be worth $13 trillion by 2030, April 1, 2022. Available online: https://fortune.com/2022/04/01/citi-metaverse-economy-13-trillion-2030/

- 3. CNET. Why Apple Vision Pro’s $3,499 Price Makes More Sense Than You Think? June 8, 2023. Available online: https://www.cnet.com/tech/computing/why-apple-vision-pros-3500-price-makes-more-sense-than-you-think/ (accessed on 3 July 2023).

- 4. Nokia OYj. Metaverse Explained. 2023. Available online: https://www.nokia.com/about-us/newsroom/articles/metaverseexplained/ (accessed on 9 April 2023).

- 5. Kang, J.; Ye, D.; Nie, J.; Xiao, J.; Deng, X.; Wang, S.; Xiong, Z.; Yu, R.; Niyato, D. Blockchain-based Federated Learning for Industrial Metaverses: Incentive Scheme with Optimal AoI. In Proceedings of the 2022 IEEE International Conference on Blockchain (Blockchain), Espoo, Finland, 22–25 August 2022; pp. 71–78.

- 6. Lee, J.; Kundu, P. Integrated cyber-physical systems and industrial metaverse for remote manufacturing. Manuf. Lett. 2022, 34, 12–15.

- 7. Mourtzis, D., Panopoulos, N., Angelopoulos, J., Wang, B., and Wang, L., Human centric platforms for personalized value creation in metaverse, Journal of Manufacturing Systems, Volume 65, 2022, Pages 653-659, DOI = https://doi.org/10.1016/j.jmsy.2022.11.004

- 8. Mourtzis, D. The Metaverse in Industry 5.0: A Human-Centric Approach towards Personalized Value Creation. Encyclopedia 2023, 3, 1105-1120. https://doi.org/10.3390/encyclopedia3030080

- 9. NVIDIA, BMW Group Starts Global Rollout of NVIDIA Omniverse. March 21, 2023. Available online: https://blogs.nvidia.com/blog/bmw-group-nvidia-omniverse/.

- 10. Bohemia Interactive Simulations. Available online: https://bisimulations.com/products/vbs4 (accessed on 3 July 2023).

- 11. Bundeswehr. Von Virtual Battle Space 3 in die Praxis. April 4, 2021. Available online: https://www.bundeswehr.de/de/organisation/streitkraeftebasis/aktuelles/von-virtual-battle-space-3-in-die-praxis-5050618 (accessed on 3 July 2023).

- 12. Bundeswehr. Im Video: WTD 91 erprobt das digitale Gefecht mit Virtual Reality. June 7, 2021. Available online: https://www.bundeswehr.de/de/organisation/ausruestung-baainbw/aktuelles/im-video-wtd-91-erprobt-das-digitale-gefecht-mit-virtual-reality-5085994 (accessed on 3 July 2023).

- 13. XR4All. Definition – What Is XR?, XR4All Horizon 2020, Available online: https://xr4europe.eu/whatisxr/ (accessed on 3 July 2023).

- 14. Das, S., Franguiadakis, T., Papka, M., Defanti, T.A. & Sandin, D.J. A Genetic Programming Application in Virtual Reality. In Proceedings of the 1994 IEEE 3rd International Fuzzy Systems Conference, Orlando, FL, USA, 26–29 June 1994, pp. 480–484.

- 15. Zhou, F., Duh, H.B.-L. & Billinghurst, M. Trends in Augmented Reality Tracking, Interaction and Display: A Review of Ten Years of ISMAR. In Proceedings of the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, 15–18 September 2008; pp. 193–202.

- 16. Nevelsteen, K.J. (2017) Virtual World, Defined from a Technological Perspective, and Applied to Video Games, Mixed Reality and the Metaverse. Computer Animation and Virtual Worlds, Vol. 29(1) / e1752.

- 17. Baltzer, M. López, D. Bielecki, K. & Flemisch, F. Agile Development and Testing of Weapon Systems with Tangible eXtended Reality (tangibleXR). In: Military Scientific Research Annual Report 2021, Federal Ministry of Defence, 2022.

- 18. Milgram, P. & Kishino, F. (1994), A Taxonomy of Mixed Reality Visual Displays, IEICE Transactions on Information and Systems, Vol E77-D, No. 12, pp. 1321–1329.

- 19. Slater, M. Immersion and The Illusion of Presence in Virtual Reality. British Journal of Psychology, Vol. 109(3), 2018, pp. 431-433.

- 20. Kim, G. & Biocca, F. Immersion in Virtual Reality Can Increase Exercise Motivation and Physical Performance. In: Virtual, Augmented and Mixed Reality: Applications in Health, Cultural Heritage, and Industry: 10th International Conference, VAMR 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA, July 15-20, 2018, Proceedings, Part II 10, 2018, pp. 94-102.

- 21. Berkman, M.I. & Akan, E. Presence and Immersion in Virtual Reality. In: Lee, N. (eds) Encyclopedia of Computer Graphics and Games. Springer, Cham, 2019, Available online: https://doi.org/10.1007/978-3-319-08234-9_162-1 (accessed on 3 July 2023).

- 22. Wasser, J., Parkes, A., Diels, C., Tovey, M. & Baxendale, A Human Centred Design of First and Last Mile Mobility Vehicles, PhD Thesis, Coventry University, February, 2020. Available online: https://pure.coventry.ac.uk/ws/portalfiles/portal/38014891/Wasser_PhD.pdf (accessed on 3 July 2023).

- 23. López-Hernández, D., Bloch, M., Bielecki, K., Schmidt, R., Baltzer, M. C. A. & Flemisch, F.: Tangible VR Multi-user Simulation Methodology for a Balanced Human System Integration. In: Advances in Simulation and Digital Human Modeling. 2020, pp. 183–189.

- 24. Luimula, M., Haavisto, T., Pham, D., Markopoulos, P., Aho, J., Markopoulos, E. & Saarinen, J. The Use of Metaverse in Maritime Sector – a Combination of Social Communication, Hands on Experiencing and Digital Twins. In: Evangelos Markopoulos, Ravindra S. Goonetilleke and Yan Luximon (eds.). Creativity, Innovation and Entrepreneurship. AHFE International Conference 2022. AHFE Open Access, 31. AHFE International, New York, USA, 24-28 July 2022, pp. 115-123.

- 25. Österman, M. Development of a virtual reality conference application. Bachelor’s Thesis in Information and Communications Technology. Turku University of Applied Sciences, 2021. Available online: https://www.theseus.fi/handle/10024/503216

- 26. Kaarlela, T., Pitkäaho, T., Pieskä, S., Padrão, P., Bobadilla, L., Tikanmäki, M., Haavisto, T., Blanco Bataller, V., Laivuori, N. & Luimula, M. Towards Metaverse: Utilizing Extended Reality and Digital Twins to Control Robotic Systems. Actuators, Vol. 12(6), Article 219, 2023, 20 p.

- 27. Tarkkanen, K., Saarinen, J., Luimula, M. & Haavisto, T. Pragmatic and Hedonic Experience of Virtual Maritime Simulator, In: Proceeding of the 7th Annual International GamiFIN conference, Levi, Finland, April 18-21, 2023, pp. 106-112.